Electrical resistance heating, aka Joule heating, is a microscopic phenomenon. The heating is caused by the flow of electrons (particles with energy associated with them, not waves ... but let's not get into quantum mechanical arguments) through a material conductor, where the electrons lose energy by scattering from defects, crystal grain boundaries, impurities, etc. When an electron is scattered, it deposits some of its energy into the crystalline lattice as phonons (quantized vibrations of the lattice atoms). Phonon energy is directly related to temperature and heating.

Therefore, if the power dissipated in the conductor is expressed as I²R, then anything you can do to lower the current, I, greatly helps reduce the Joule heating, because you have fewer electrons flowing and banging into things inside the conductor. This is why 220V is preferred: it lets you deliver the same power at lower current, which in turn lets you reduce the size of the conductor. Since there is less current, there is less Joule heating and lower loss. Now, at higher voltage each electron carries more energy, but it delivers that energy to the load, not to the wire. What heats the wire is the voltage drop along the wire itself, IR, and that shrinks along with the current. So the loss in the conductor goes as the square of the current: halve the current and the heating in the wire drops to a quarter, even though the power delivered to the load is the same.
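To put rough numbers on the I²R argument, here is a minimal sketch in Python. The 1500 W load and the 0.2 ohm wire resistance are made-up illustration values, not figures from this thread, and the current is approximated as P/V (i.e. the small voltage drop in the wire is ignored).

```python
def wire_loss_watts(load_power_w, supply_voltage_v, wire_resistance_ohm):
    """Joule heating in the supply wire, P_loss = I^2 * R.

    Assumption: the load draws I = P / V, ignoring the voltage drop in the wire.
    """
    current_a = load_power_w / supply_voltage_v
    return current_a ** 2 * wire_resistance_ohm


# Hypothetical values chosen only for illustration.
LOAD_W = 1500.0   # power delivered to the load
WIRE_OHM = 0.2    # total resistance of the supply wiring

loss_110 = wire_loss_watts(LOAD_W, 110.0, WIRE_OHM)
loss_220 = wire_loss_watts(LOAD_W, 220.0, WIRE_OHM)

print(f"110V: {LOAD_W / 110.0:.1f} A, wire loss {loss_110:.1f} W")
print(f"220V: {LOAD_W / 220.0:.1f} A, wire loss {loss_220:.1f} W")
print(f"loss ratio: {loss_110 / loss_220:.1f}x")
```

Doubling the voltage halves the current, so for the same wire the loss ratio comes out to a factor of four regardless of the particular load and resistance you plug in.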

Westinghouse and Edison would be very proud of the arguments we are making in this thread ... but we all know who won the AC-DC power transmission wars

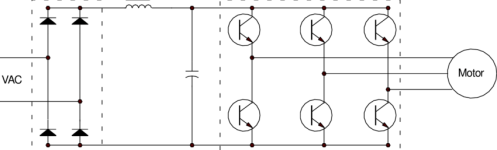

I suppose if the outlet is rated for 15A, that's cutting it close at 13A. Since it's variable speed, I wouldn't expect a huge spike in startup current though.